[dropcap]T[/dropcap]he booster said, “I am a great forecaster. I can read your mind. Think of three numbers and write them on the board.” I wrote seven, three, and nine. “Okay, you’re thinking seven, three, and, let me see, nine!” It is hard not to believe in this kind of ‘magic’, right?

This is obviously nuts. Would you hire this person to invest your money based on this “proof” of forecasting skills? It is no test of insight or foresight if you are given the answer first. This blatant case of “data dredging” — looking at the data you want to foretell first — can make many a quantitative method look prophetic.

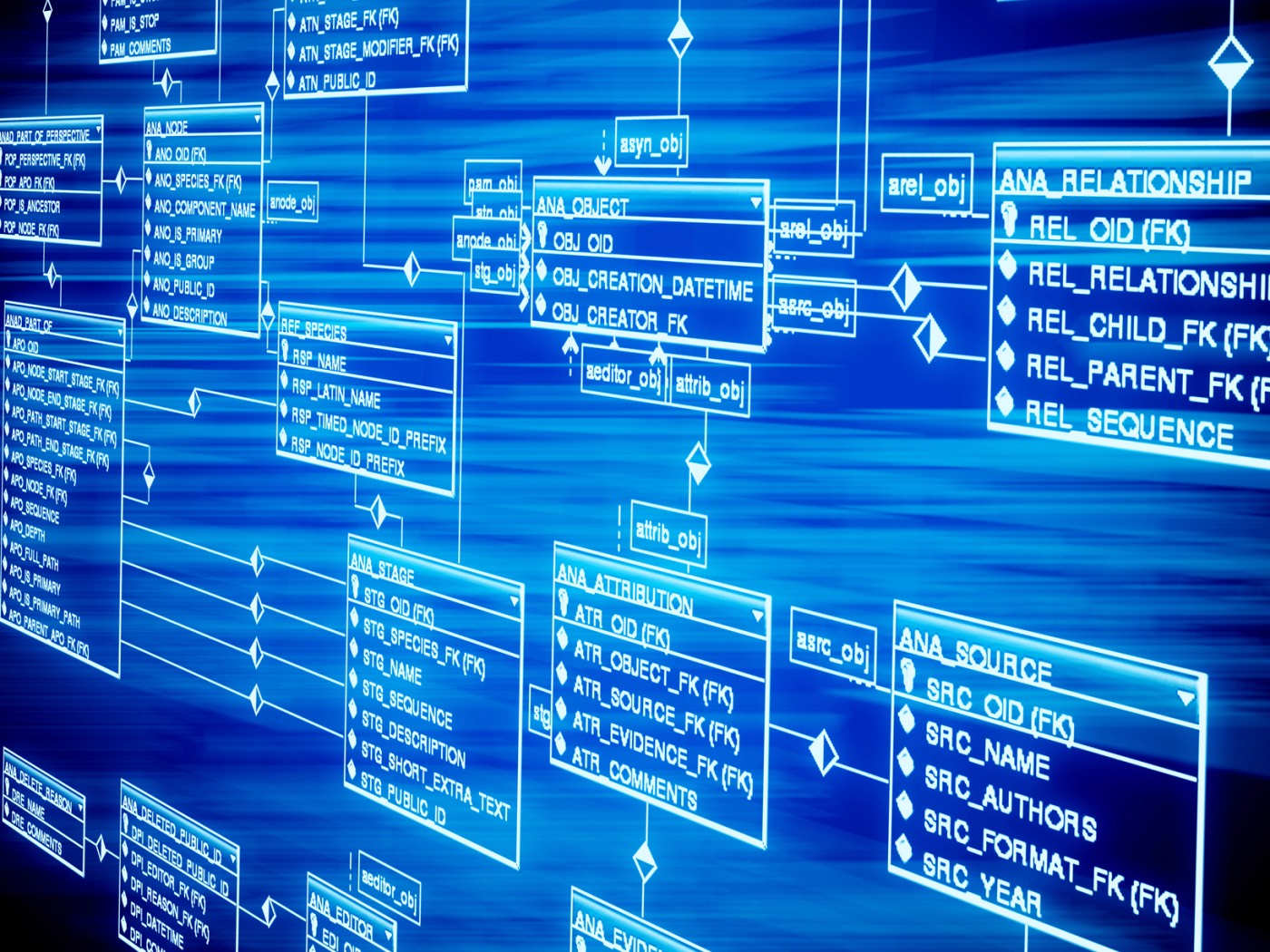

Yet, all too often, data mining models are used in important business decisions with essentially no more proof than this. Only subtleties in method and process mask the data dredging going on.

Still, being able to describe what has already happened is no measure of a forecaster’s mettle. All too often, all available data is run through a number of mining variations, and the variation that fits best is picked for implementation. All you are doing is picking the model that can give the best answer when it already knows the answer. The real test is when the answer is unknown. Wouldn’t it be nice to know when confidence in a technique, method, or model is warranted?
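To make the point concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a real mining workflow: the outcomes are coin flips, so nothing can predict them, and each candidate "model" is just a fixed set of random guesses. The function names (`dredge`, `accuracy`, `make_noise_labels`) and the sample sizes are invented for this example.

```python
import random


def make_noise_labels(n, rng):
    """Coin-flip outcomes: by construction, nothing can predict them."""
    return [rng.randint(0, 1) for _ in range(n)]


def accuracy(predictions, labels):
    """Fraction of cases where the prediction matches the outcome."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def dredge(n_candidates=200, n_seen=50, n_unseen=50, seed=0):
    """Pick the candidate that best fits the seen data, then score it on unseen data.

    Returns (accuracy on seen data, accuracy on unseen data) for the winner.
    """
    rng = random.Random(seed)
    seen = make_noise_labels(n_seen, rng)
    unseen = make_noise_labels(n_unseen, rng)

    # Each hypothetical "model" is only a fixed list of random guesses,
    # one guess per case (seen cases first, then unseen cases).
    candidates = [
        [rng.randint(0, 1) for _ in range(n_seen + n_unseen)]
        for _ in range(n_candidates)
    ]

    # Data dredging: choose the winner by how well it fits data it was scored on.
    best = max(candidates, key=lambda c: accuracy(c[:n_seen], seen))

    return accuracy(best[:n_seen], seen), accuracy(best[n_seen:], unseen)


if __name__ == "__main__":
    fit_on_seen, fit_on_unseen = dredge()
    print(f"best candidate on seen data:   {fit_on_seen:.0%}")
    print(f"same candidate on unseen data: {fit_on_unseen:.0%}")
```

With a couple of hundred candidates, the winner will typically score well above 50 percent on the data used to pick it, even though every candidate is pure guesswork. Its score on the unseen cases tends to hover near chance, which is the only number that says anything about forecasting.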

Confidence Consequences

In business and financial contexts, your level of confidence greatly affects which actions you take: how much money to invest and for how long, what percentage of capital to risk, what time horizon you will accept, and how well you hold steady against criticism and competing views. Your confidence in techniques also affects your ability to attract investors, partners, allies, and excited workers, as well as your ability to manage and execute the given plan.

Because models are increasingly a key determinant of corporate profitability, the issue of whether your confidence is justified becomes intimately connected with an enterprise’s success. Stories about failures from false confidence abound. Equally devastating is inadequate confidence in a good thing. The range of issues that allow well-founded confidence in a modeling approach is complex. Ultimately, three things form a triangle in which each should reinforce and confirm the others: the implicit and explicit implications of the model; the nature of the data, together with your understanding of and experience with it; and your understanding of how customer relationship management works in reality.

Unfortunately, matters have deteriorated with the advent of exceedingly large datasets and the automated model production methods of data mining; the true nature of both the data and the resulting models can be highly opaque.

Testing To The Rescue

But companies do have at their disposal an excellent confidence-generating method that is easy to understand, systematically implementable, but often underutilized: adequate testing.

Companies that make physical products know to test them as thoroughly as possible in the laboratory before real customers start using them. Flaws can subject the company to irreparable reputational damage as well as lawsuits. Would the makers of an automobile tweak the power-steering mechanism just before production without a test drive? Yet the equivalent is all too common in the model-making world.

Testing in a way that is well-founded and offers a serious boost in confidence requires recognizing that data is generally a nonrenewable resource, and just as with other valuable resources, systematic planning is required for optimal use. Adequate testing requires planning your research process and being ready for most contingencies with an adequate number of validation samples and truly unused holdout test samples. The nice clear paradigms seen in many research papers don’t reflect the complexities of real-world model development.
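One simple way to budget data up front is a three-way split, sketched below in Python. This is an assumption-laden illustration rather than a prescribed procedure: the function name `three_way_split`, the 20 percent fractions, and the single random shuffle are all choices made for the example, and real projects may need several validation samples or splits that respect time order.

```python
import random


def three_way_split(records, validation_fraction=0.2, holdout_fraction=0.2, seed=0):
    """Shuffle once, then carve the data into training, validation, and holdout parts.

    The holdout part is set aside first and should stay untouched until the
    final model has been chosen.
    """
    if not 0 < validation_fraction + holdout_fraction < 1:
        raise ValueError("validation and holdout fractions must sum to less than 1")

    shuffled = list(records)               # copy, so the caller's data is not reordered
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible

    n_holdout = int(len(shuffled) * holdout_fraction)
    n_validation = int(len(shuffled) * validation_fraction)

    holdout = shuffled[:n_holdout]
    validation = shuffled[n_holdout:n_holdout + n_validation]
    training = shuffled[n_holdout + n_validation:]
    return training, validation, holdout


if __name__ == "__main__":
    training, validation, holdout = three_way_split(range(100))
    print(len(training), len(validation), len(holdout))
```

The training part is for building candidate models and the validation part is for comparing them. The holdout part is spent only once, on the final choice, because each look at it uses up some of its value as an honest test.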

Back To The Classics

To help you understand these complexities in the more rarefied realm of data mining, take a look at the paradigm of classical statistical testing. Simply stated, the idea is to generate a hypothesis, usually motivated by some real-world understanding and question. For example: drug X lowers blood pressure, because other compounds in its family do.